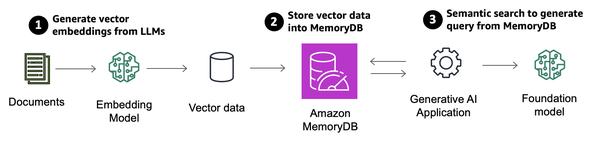

Amazon Web Services (AWS) has announced the general availability of vector search for Amazon MemoryDB. The new capability lets developers store, index, retrieve, and search vector data in real time, which makes it well suited to real-time machine learning (ML) and generative AI applications.
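The following sketch illustrates what a vector search does. It runs an exact (brute-force) k-nearest-neighbor search over an in-process Python dictionary and ranks the stored vectors by their cosine similarity to a query vector. It does not call MemoryDB. The `FlatVectorIndex` class and its methods are hypothetical names chosen for this example. MemoryDB itself exposes vector indexes through Redis-style search commands such as `FT.CREATE` and `FT.SEARCH`; see the MemoryDB documentation for their exact syntax.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class FlatVectorIndex:
    """Hypothetical in-memory index: stores vectors by key and scans all of them per query."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.vectors: Dict[str, List[float]] = {}

    def add(self, key: str, vector: List[float]) -> None:
        # Reject vectors whose dimension does not match the index.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        self.vectors[key] = vector

    def knn(self, query: List[float], k: int) -> List[Tuple[str, float]]:
        """Return the k (key, similarity) pairs most similar to the query, best first."""
        scored = [(key, cosine_similarity(query, vec)) for key, vec in self.vectors.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


index = FlatVectorIndex(dim=3)
index.add("doc:1", [1.0, 0.0, 0.0])
index.add("doc:2", [0.9, 0.1, 0.0])
index.add("doc:3", [0.0, 0.0, 1.0])

# No stored vector equals the query. The search still returns the closest
# ones by direction: doc:2 first, then doc:1.
top = index.knn([0.8, 0.2, 0.0], k=2)
```

A full scan like this is conceptually what an exact (flat) index does. Approximate indexes such as HNSW give up a small amount of recall in exchange for much faster queries on large collections.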

MemoryDB is a durable, in-memory database service with microsecond read latency and single-digit millisecond write latency. Vector search lets MemoryDB find related data by similarity rather than by exact match. Items are stored as vector embeddings, and a query returns the stored vectors closest to a query vector under a distance metric such as cosine or Euclidean distance. Typical use cases include:

* **Retrieval-Augmented Generation (RAG):** Vector search retrieves the passages in a large corpus that are most relevant to a user's query. Those passages are then added to the prompt of a large language model (LLM) so that it can ground its answer in them.

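* **Low-latency, durable semantic caching:** Previous responses from a foundation model (FM) are stored in memory alongside the embeddings of their prompts. When a new prompt is semantically similar to a cached one, the stored response is returned without invoking the FM again, which reduces both inference cost and response time.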

* **Real-time anomaly (fraud) detection:** Vector search can flag potentially fraudulent transactions by comparing the vector representation of each new transaction against those of known fraudulent transactions.
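The semantic-caching pattern can be sketched in a few lines. The sketch below assumes that prompt embeddings are already computed (it takes plain vectors as input) and that the model call can be passed in as a callable. `SemanticCache`, `get_or_generate`, `fake_model`, and the 0.95 threshold are all hypothetical. The cache lives in a Python list, so it is neither durable nor shared. In a real deployment, that storage and the nearest-neighbor lookup would be handled by a vector index such as the one MemoryDB provides.

```python
import math
from typing import Callable, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical cache that reuses a response when a new prompt embedding is close to a cached one."""

    def __init__(self, threshold: float = 0.95) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[List[float], str]] = []

    def lookup(self, embedding: List[float]) -> Optional[str]:
        # Find the most similar cached prompt (a brute-force nearest-neighbor scan).
        best_score, best_response = -1.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        # Only treat it as a hit if it is similar enough.
        return best_response if best_score >= self.threshold else None

    def get_or_generate(self, embedding: List[float], generate: Callable[[], str]) -> str:
        cached = self.lookup(embedding)
        if cached is not None:
            return cached
        # Cache miss: call the model and remember its answer for similar prompts.
        response = generate()
        self.entries.append((embedding, response))
        return response


calls: List[int] = []


def fake_model() -> str:
    """Stand-in for an expensive FM call; counts how often it runs."""
    calls.append(1)
    return f"answer #{len(calls)}"


cache = SemanticCache(threshold=0.95)
first = cache.get_or_generate([1.0, 0.0, 0.2], fake_model)    # miss: calls the model
second = cache.get_or_generate([1.0, 0.0, 0.25], fake_model)  # similar prompt: served from cache
third = cache.get_or_generate([0.0, 1.0, 0.0], fake_model)    # unrelated prompt: miss
```

The threshold sets the trade-off. A higher value returns cached answers only for near-duplicate prompts, while a lower value saves more model calls but risks returning an answer to a question that was not actually asked. The same nearest-neighbor check, run against embeddings of known fraudulent transactions instead of cached prompts, is the core of the anomaly-detection use case.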

Vector search for Amazon MemoryDB gives developers on AWS a fast, durable way to store and query vector data. This can help companies across industries build more intelligent and efficient applications.