Google Cloud published a practical guide to maximizing LLM serving throughput on GPUs in Google Kubernetes Engine (GKE).

The blog post addresses the challenge of serving large language models (LLMs) cost-effectively. GKE features such as workload and infrastructure autoscaling and load balancing help keep LLM serving cost-efficient.

The blog post provides practical recommendations for maximizing serving throughput on NVIDIA GPUs on GKE, including:

* **Deciding whether to quantize the model and which quantization to use.** FP16 and bfloat16 (BF16) provide virtually the same accuracy as FP32 while using half the memory.

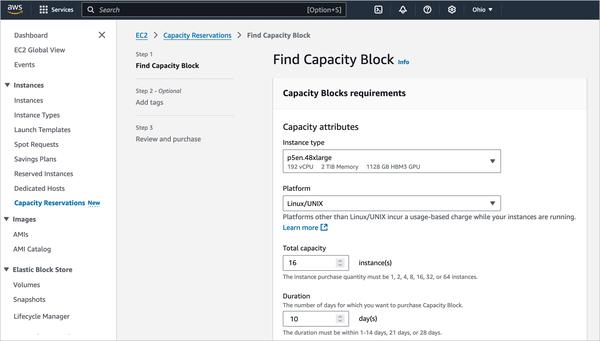

* **Choosing a machine type to fit the model.** The right machine type depends on the model's parameter count and the data type of its weights, which together determine how much GPU memory the weights require.

* **Choosing the right GPU.** GKE offers a variety of VMs powered by NVIDIA GPUs, and the best choice depends on the model's characteristics and your performance requirements.
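To make the arithmetic behind these recommendations concrete, here is a minimal Python sketch that estimates weight memory from the parameter count and data type, then derives how many GPUs of a given memory size would hold those weights. The INT8/INT4 byte sizes, the 20% `headroom` reserved for the KV cache and runtime, and the helper names are assumptions for illustration, not values taken from the guide.

```python
import math

# Bytes per parameter for common weight data types.
# FP16 and BF16 halve FP32's footprint; the INT8/INT4 entries are
# included only for comparison (assumption of this sketch).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(num_params: float, dtype: str, gpu_memory_gb: float,
             headroom: float = 0.2) -> int:
    """Smallest number of identical GPUs whose combined memory holds the weights.

    `headroom` is the fraction of each GPU's memory kept free for the KV cache,
    activations, and runtime overhead; 20% is a hypothetical default.
    """
    usable_per_gpu = gpu_memory_gb * (1.0 - headroom)
    return math.ceil(weight_memory_gb(num_params, dtype) / usable_per_gpu)


if __name__ == "__main__":
    # A 7B-parameter model on 24 GB GPUs (the memory size of an NVIDIA L4).
    for dtype in ("fp32", "bf16"):
        print(dtype, weight_memory_gb(7e9, dtype), min_gpus(7e9, dtype, gpu_memory_gb=24))
    # fp32 28.0 2
    # bf16 14.0 1
```

Under these assumptions, moving a 7B-parameter model from FP32 (about 28 GB of weights) to BF16 (about 14 GB) is the difference between needing two 24 GB GPUs and needing one. The KV cache grows with batch size and sequence length, so the headroom figure is only a starting point.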

Additionally, the blog post discusses how to optimize a model server platform for a given inference workload, including:

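* **Optimizing for input-heavy vs. output-heavy use cases.** LLM inference involves two phases: prefill, which processes all input tokens in parallel and is typically compute-bound, and decode, which generates output tokens one at a time and is typically memory-bandwidth-bound. Input-heavy workloads therefore spend more of their time in prefill, and output-heavy workloads more in decode.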

* **How batching affects performance.** Batching requests is essential for higher throughput because it makes fuller use of GPU memory, HBM bandwidth, and GPU FLOPS without increasing cost.
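A back-of-the-envelope roofline model shows why batching raises throughput and why prefill and decode behave differently. The Python sketch below assumes that each decode step reads every weight from HBM once, regardless of batch size, and costs roughly 2 FLOPs per parameter per generated token. It ignores KV-cache traffic and kernel overheads, and the `Gpu` figures are hypothetical rather than the specifications of any particular NVIDIA part.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    hbm_bandwidth: float  # bytes per second
    peak_flops: float     # FLOP per second


def decode_step_seconds(num_params: float, bytes_per_param: float,
                        batch_size: int, gpu: Gpu) -> float:
    """Rough lower bound on the time of one decode step for `batch_size` sequences.

    Memory term: all weights are streamed from HBM once per step.
    Compute term: about 2 FLOPs per parameter for each sequence's new token.
    KV-cache reads are ignored (simplifying assumption).
    """
    memory_time = num_params * bytes_per_param / gpu.hbm_bandwidth
    compute_time = 2 * num_params * batch_size / gpu.peak_flops
    return max(memory_time, compute_time)


def decode_tokens_per_second(num_params: float, bytes_per_param: float,
                             batch_size: int, gpu: Gpu) -> float:
    """Generated tokens per second across the whole batch."""
    return batch_size / decode_step_seconds(num_params, bytes_per_param, batch_size, gpu)


if __name__ == "__main__":
    # Hypothetical accelerator: 1 TB/s of HBM bandwidth, 100 TFLOP/s peak.
    gpu = Gpu(hbm_bandwidth=1e12, peak_flops=1e14)
    for batch in (1, 8, 64, 256):
        tps = decode_tokens_per_second(7e9, 2.0, batch, gpu)  # 7B params in BF16
        print(f"batch={batch:4d}  ~{tps:,.0f} tokens/s")
```

While the memory term dominates, tokens per second grow almost linearly with batch size on the same hardware. Once the compute term dominates (around a batch of 100 with these made-up numbers), throughput plateaus near `peak_flops / (2 * num_params)`. Prefill behaves like a large batch: a 2,048-token prompt is processed in one pass, so under this model it is compute-bound, while decode at small batch sizes is bandwidth-bound.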

Overall, the blog post offers practical guidance for maximizing LLM serving throughput on GPUs in GKE. Following these recommendations can help organizations minimize the cost of serving LLMs while maintaining high performance.