Google Cloud announced new text embedding models in Vertex AI, "text-embedding-004" and "text-multilingual-embedding-002," which can generate optimized embeddings based on "task types." This is a significant development for Retrieval Augmented Generation (RAG) applications.

Traditional semantic similarity search often fails to deliver accurate results in RAG because questions and answers are inherently different. For example, "Why is the sky blue?" and its answer, "The scattering of sunlight causes the blue color," have distinct meanings.

"Task types" bridge this gap by enabling models to understand the relationship between a query and its answer. By specifying "QUESTION_ANSWERING" for query texts and "RETRIEVAL_DOCUMENT" for answer texts, the models can place embeddings closer together in the embedding space, leading to more accurate search results.

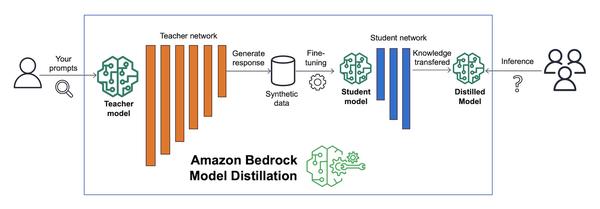

These new models leverage "LLM distillation," where a smaller model is trained from a Large Language Model (LLM). This allows the embedding models to inherit some of the reasoning capabilities of LLMs, improving search quality while reducing latency and cost.

In conclusion, "task types" in Vertex AI Embeddings are a significant step towards improving the accuracy and efficiency of RAG systems. By simplifying semantic search, this feature empowers developers to build more intelligent, language-aware applications.