Confluent, in collaboration with Google Cloud, has published a blog post showing how organizations can use large language models (LLMs) to automate SQL query generation and streamline their data analytics workflows. The article describes an end-to-end architecture that integrates LLMs with Confluent and Vertex AI to process data in real time and surface insights from it.

What particularly caught my eye was how LLMs can let business users with limited SQL expertise explore datasets on their own. By asking questions in natural language, users can query the data and obtain insights without having to write complex SQL themselves.

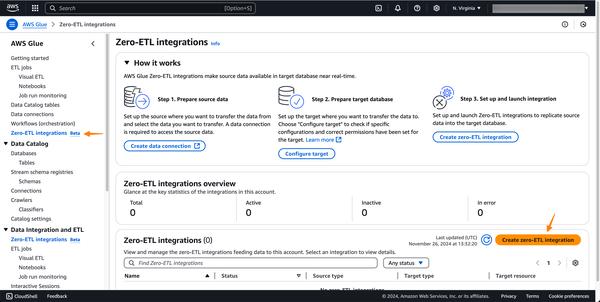

One of the key problems this technology addresses is the difficulty of writing complex SQL queries. Writing and optimizing such queries is time-consuming and often requires specialized data engineering skills. Automating this process with LLMs can save organizations time and resources while reducing the risk of errors.
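To make the idea more concrete, here is a minimal sketch of the two pieces a text-to-SQL step typically needs: a prompt that combines the table schema with the user's question, and a check that the generated query is at least valid against that schema before it runs. This is not the implementation from the Confluent and Google Cloud post. The `orders` schema and the function names `build_prompt` and `is_valid_sql` are hypothetical, the actual LLM call (for example, to a model on Vertex AI) is deliberately omitted, and the validation step uses SQLite's `EXPLAIN` on an empty in-memory database as a stand-in technique for catching syntax errors and unknown columns.

```python
import sqlite3

# Hypothetical schema, used only for illustration.
SCHEMA_DDL = """
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER,
    amount REAL,
    created_at TEXT
);
"""


def build_prompt(schema_ddl: str, question: str) -> str:
    """Combine the table schema and a natural-language question into a text-to-SQL prompt."""
    return (
        "You are a SQL assistant. Using only the tables below, "
        "write a single SQL query that answers the question.\n\n"
        f"Schema:\n{schema_ddl.strip()}\n\n"
        f"Question: {question}\n"
        "SQL:"
    )


def is_valid_sql(schema_ddl: str, sql: str) -> bool:
    """Return True if `sql` compiles against the schema.

    The schema is created in an empty in-memory SQLite database, and EXPLAIN
    compiles the statement without touching any real data. Syntax errors and
    references to missing tables or columns raise sqlite3.Error.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_ddl)
        conn.execute(f"EXPLAIN {sql}")
        return True
    except sqlite3.Error:
        return False
    finally:
        conn.close()


if __name__ == "__main__":
    prompt = build_prompt(SCHEMA_DDL, "What is the total order amount per customer?")
    print(prompt)
    # In a real pipeline the prompt would be sent to an LLM; here a candidate
    # query is hard-coded instead of calling any model API.
    candidate = "SELECT customer_id, SUM(amount) AS total FROM orders GROUP BY customer_id"
    print(is_valid_sql(SCHEMA_DDL, candidate))
    print(is_valid_sql(SCHEMA_DDL, "SELECT total FROM orders"))
```

A compile-only check like this cannot tell whether the query answers the question correctly, but it cheaply rejects malformed output before it reaches a production database.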

Furthermore, integrating LLMs with Confluent's real-time streaming capabilities addresses the need for real-time data analysis. Traditional batch processing often lacks the speed and agility that real-time decision-making requires; with streaming, insights become available as data arrives, so businesses can act proactively rather than after the fact.

Overall, I find the integration of LLMs, Confluent, and Vertex AI to be a significant step forward in data analytics. By automating SQL query generation and enabling real-time streaming, this solution helps organizations overcome two long-standing obstacles, the scarcity of SQL expertise and the latency of batch processing, and get more value from their data.