Amazon Web Services (AWS) has announced new updates to Guardrails for Amazon Bedrock, designed to enhance the safety and trustworthiness of generative AI applications. The updates add hallucination detection and a standalone API that lets applications apply customized guardrails to any model, helping keep outputs responsible and trustworthy.

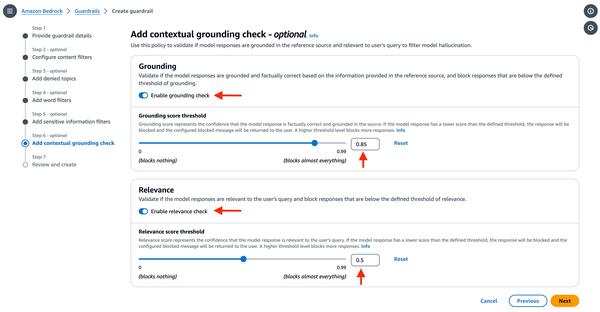

The hallucination detection feature, delivered as contextual grounding checks, lets customers check whether a model response is grounded in a reference source and relevant to the user's query. This helps catch responses that contain incorrect or new information not supported by the reference source.
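To make the inputs of a grounding check concrete, here is a minimal Python sketch of how the reference source, the user's query, and the model's answer might be packaged for the ApplyGuardrail API, and how the grounding results might be read back. The qualifier values (`grounding_source`, `query`, `guard_content`) and the `contextualGroundingPolicy` response fields reflect our reading of the Amazon Bedrock Runtime API reference and should be verified there. `build_grounding_content` and `grounding_failures` are hypothetical helper names, and the guardrail is assumed to already have contextual grounding thresholds configured.

```python
from typing import Any


def build_grounding_content(source: str, query: str, answer: str) -> list[dict[str, Any]]:
    """Hypothetical helper: wrap source, query, and answer as ApplyGuardrail
    text blocks, each tagged with the qualifier a grounding check expects
    (qualifier names assumed from the Bedrock Runtime API reference)."""
    return [
        {"text": {"text": source, "qualifiers": ["grounding_source"]}},
        {"text": {"text": query, "qualifiers": ["query"]}},
        {"text": {"text": answer, "qualifiers": ["guard_content"]}},
    ]


def grounding_failures(assessments: list[dict[str, Any]]) -> list[tuple[str, Any, Any]]:
    """Hypothetical helper: collect (filter type, score, threshold) for every
    contextual grounding filter (e.g. GROUNDING or RELEVANCE) that the
    guardrail reported as BLOCKED in its assessments."""
    failed = []
    for assessment in assessments:
        policy = assessment.get("contextualGroundingPolicy", {})
        for f in policy.get("filters", []):
            if f.get("action") == "BLOCKED":
                failed.append((f.get("type"), f.get("score"), f.get("threshold")))
    return failed
```

With a boto3 `bedrock-runtime` client, the list from `build_grounding_content` would be passed as `content=` to `apply_guardrail` with `source="OUTPUT"`, and `grounding_failures(response["assessments"])` would report which checks (grounding, relevance, or both) rejected the answer.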

The ApplyGuardrail API lets customers evaluate user inputs and model responses against their configured safeguards without invoking a foundation model on Amazon Bedrock. This makes it particularly useful for organizations using self-managed or third-party LLMs, which can then apply the same safety measures across all their applications.
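Because the check is decoupled from the model call, it can wrap any LLM. The following is a minimal Python sketch, assuming a boto3 `bedrock-runtime` client whose `apply_guardrail` method accepts `guardrailIdentifier`, `guardrailVersion`, `source`, and `content`, and returns `action` and `outputs`; verify these names against the current boto3 reference. `guard_text` is a hypothetical helper, and the model call itself is left out so the same function can screen prompts before, and responses after, any self-managed or third-party model.

```python
from typing import Any, Protocol


class GuardrailClient(Protocol):
    """Assumed shape of a boto3 bedrock-runtime client's apply_guardrail method."""

    def apply_guardrail(self, **kwargs: Any) -> dict[str, Any]: ...


def guard_text(client: GuardrailClient, guardrail_id: str, guardrail_version: str,
               text: str, source: str = "INPUT") -> tuple[bool, str]:
    """Hypothetical helper: check a user prompt (source="INPUT") or a model
    response (source="OUTPUT") against a configured guardrail.
    Returns (intervened, text_to_use)."""
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    if response.get("action") == "GUARDRAIL_INTERVENED":
        # When the guardrail intervenes, its replacement text (for example the
        # configured blocked message or masked content) is returned in "outputs".
        replacement = "".join(o.get("text", "") for o in response.get("outputs", []))
        return True, replacement
    # No intervention: the original text passes through unchanged.
    return False, text
```

In use, the client would come from `boto3.client("bedrock-runtime")`, and the guardrail ID and version are placeholders for values from your own configured guardrail. Running `guard_text` once on the prompt and once on the model's reply gives the same safeguards regardless of which model sits in between.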

With these updates, AWS reaffirms its commitment to providing tools and solutions that empower customers to build secure and reliable generative AI applications. Guardrails for Amazon Bedrock is a significant step in this direction, offering customers greater control over the behavior of the LLMs they use.

Customers interested in learning more about these new features can visit the Guardrails for Amazon Bedrock product page on the AWS website.