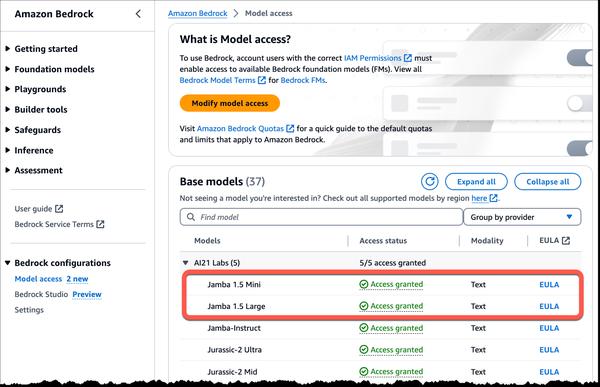

AWS and AI21 Labs have announced that AI21 Labs' new Jamba 1.5 family of large language models (LLMs), comprising Jamba 1.5 Mini and Jamba 1.5 Large, is available in Amazon Bedrock. The models are built for long-context workloads and aim to deliver fast, efficient inference without sacrificing output quality across a wide range of applications.

The Jamba 1.5 models use a hybrid architecture that combines transformer layers with structured state space model (SSM) layers based on Mamba. Because SSM layers carry a fixed-size state from token to token instead of a cache that grows with every token, this design lets Jamba 1.5 models handle context windows of up to 256K tokens while retaining the output quality of traditional transformer models.
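To make the hybrid design more concrete, the following toy Python sketch (not AI21's implementation) contrasts the two layer types. A state space layer updates a fixed-size state for each token, so its memory does not grow with the input, whereas each attention layer keeps a key/value cache entry for every past token. The scalar recurrence, the hypothetical `hybrid_pattern` helper, and the one-attention-layer-in-eight interleaving are illustrative assumptions, not Jamba 1.5's published configuration.

```python
from typing import List, Sequence


def ssm_scan(xs: Sequence[float], a: float, b: float, c: float) -> List[float]:
    """Toy scalar linear state space recurrence (illustrative, not Jamba's layer).

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t
    """
    h = 0.0  # the whole summary of the past is this single state value
    ys: List[float] = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def kv_cache_entries(seq_len: int, attention_layers: int) -> int:
    """Attention keeps one key/value entry per past token in every attention layer."""
    return seq_len * attention_layers


def hybrid_pattern(num_layers: int, attention_every: int) -> List[str]:
    """Hypothetical interleaving: every `attention_every`-th layer is attention, the rest SSM."""
    return [
        "attention" if (i + 1) % attention_every == 0 else "ssm"
        for i in range(num_layers)
    ]


if __name__ == "__main__":
    print(ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))  # [2.0, 1.0, 0.5]
    layers = hybrid_pattern(32, attention_every=8)
    n_attn = layers.count("attention")
    print(n_attn)  # 4
    # Cache entries for a 100,000-token prompt: all-attention stack vs. the toy hybrid stack.
    print(kv_cache_entries(100_000, 32), kv_cache_entries(100_000, n_attn))  # 3200000 400000
```

In this toy stack, swapping most attention layers for SSM layers shrinks the key/value cache eightfold while the SSM state stays constant in size, which is the intuition behind using SSM layers for long inputs.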

Some of the key strengths of the Jamba 1.5 models include:

* Long context handling: With a 256K-token context window, Jamba 1.5 models can improve the quality of enterprise applications such as lengthy document summarization and analysis, as well as agentic and retrieval-augmented generation (RAG) workflows.

* Multilingual: Support for English, Spanish, French, Portuguese, Italian, Dutch, German, Arabic, and Hebrew.

* Developer-friendly: Native support for structured JSON output and function calling, plus the ability to accept document objects as input.

* Speed and efficiency: According to AI21's own measurements, Jamba 1.5 models deliver up to 2.5x faster inference on long contexts than other models of comparable size.
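The developer-friendly features listed above include structured JSON output. The sketch below shows one way to request and decode a JSON object through the Amazon Bedrock Converse API. It assumes the model ID `ai21.jamba-1-5-mini-v1:0` (confirm the exact identifier in the Bedrock console) and asks for JSON through the prompt rather than through any model-specific JSON mode; `build_json_request`, `parse_json_reply`, and `extract_fields` are hypothetical helper names. The client is passed in, so you can supply one created with `boto3.client("bedrock-runtime", region_name="us-east-1")`.

```python
import json
from typing import Any, Dict, List

# Assumed model ID; confirm it in the Amazon Bedrock console.
MODEL_ID = "ai21.jamba-1-5-mini-v1:0"


def build_json_request(document: str, fields: List[str]) -> Dict[str, Any]:
    """Build keyword arguments for bedrock-runtime `converse` that ask for a JSON object."""
    instruction = (
        "Extract the following fields from the document and reply with a single "
        f"JSON object whose keys are exactly: {', '.join(fields)}.\n\n{document}"
    )
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": instruction}]}],
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.0},
    }


def parse_json_reply(response: Dict[str, Any]) -> Dict[str, Any]:
    """Pull the reply text out of a Converse response and decode it as JSON."""
    text = response["output"]["message"]["content"][0]["text"]
    # Tolerate surrounding prose or code fences by decoding the outermost {...} span.
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the model reply")
    return json.loads(text[start : end + 1])


def extract_fields(client: Any, document: str, fields: List[str]) -> Dict[str, Any]:
    """Call Converse with a bedrock-runtime client and return the decoded JSON object."""
    response = client.converse(**build_json_request(document, fields))
    return parse_json_reply(response)
```

Keeping request building and parsing as plain functions makes them easy to test without AWS credentials; only `extract_fields` talks to the service.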

The Jamba 1.5 models are well suited to use cases such as paired document analysis, compliance analysis, and question answering over long documents. They can compare information across multiple sources, check whether passages meet specific guidelines, and handle very long or complex documents.
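For paired document analysis, both sources need to go into a single request. This hypothetical sketch wraps two documents in labeled delimiters, appends the question, and runs a rough pre-flight check against the 256K-token window. The delimiter style is a prompting convention rather than a Jamba requirement, and the four-characters-per-token estimate, the 256,000-token budget, and the output reserve are all assumptions; real counts depend on Jamba's tokenizer, so treat the check as a coarse guard, not an exact limit. The returned list follows the Converse API message shape.

```python
from typing import Any, Dict, List

CONTEXT_WINDOW_TOKENS = 256_000  # assumption: "256K" treated as 256,000 tokens
CHARS_PER_TOKEN = 4              # assumption: rough heuristic for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; ceiling division so short strings count as one token."""
    return -(-len(text) // CHARS_PER_TOKEN)


def build_comparison_messages(doc_a: str, doc_b: str, question: str) -> List[Dict[str, Any]]:
    """Put both documents in one user turn, clearly delimited, followed by the question."""
    prompt = (
        f'<document id="A">\n{doc_a}\n</document>\n'
        f'<document id="B">\n{doc_b}\n</document>\n\n'
        f"{question}"
    )
    return [{"role": "user", "content": [{"text": prompt}]}]


def fits_in_context(messages: List[Dict[str, Any]], reserve_for_output: int = 2_000) -> bool:
    """Check that the estimated prompt size plus room for the answer fits the window."""
    prompt_tokens = sum(
        estimate_tokens(block["text"]) for message in messages for block in message["content"]
    )
    return prompt_tokens + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

If `fits_in_context` returns `False`, the usual options are to summarize or chunk one of the documents before sending the comparison request.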

AI21 Labs’ Jamba 1.5 family of models is generally available today in Amazon Bedrock in the US East (N. Virginia) AWS Region.
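Because the models launched in US East (N. Virginia), requests go to a Bedrock Runtime client in `us-east-1`. A minimal sketch using Boto3 and the Converse API follows. The model IDs for Jamba 1.5 Mini and Jamba 1.5 Large shown here are assumptions to confirm in the Bedrock console, your account needs access to the models granted, and `ask_jamba` and `make_client` are hypothetical helper names.

```python
from typing import Any

REGION = "us-east-1"  # US East (N. Virginia)
# Assumed model IDs; confirm the exact identifiers in the Amazon Bedrock console.
JAMBA_MODELS = {
    "mini": "ai21.jamba-1-5-mini-v1:0",
    "large": "ai21.jamba-1-5-large-v1:0",
}


def ask_jamba(client: Any, prompt: str, size: str = "mini", max_tokens: int = 512) -> str:
    """Send one user turn through the Converse API and return the reply text."""
    response = client.converse(
        modelId=JAMBA_MODELS[size],
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.4},
    )
    return response["output"]["message"]["content"][0]["text"]


def make_client() -> Any:
    """Create a Bedrock Runtime client in the launch Region (needs AWS credentials)."""
    import boto3  # imported lazily so ask_jamba can be tested without AWS set up

    return boto3.client("bedrock-runtime", region_name=REGION)
```

With credentials configured, a call looks like `print(ask_jamba(make_client(), "Summarize this contract in three bullet points: ...", size="large"))`.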

I believe that these models represent a significant step forward in the field of language processing. The ability to handle long contexts opens up a wide range of new possibilities for developers and businesses. I am excited to see what innovative applications will be developed using these models.